Over the last few months a small team of us at Cliqz have been building the new Ghostery browser as a Firefox fork. Drawing on our experience developing and maintaining the Cliqz browser, also a Firefox fork, over the last five years, we wanted to keep the fork as lightweight as possible while still differentiating the browser from vanilla Firefox.

Working on a fork of a high-velocity project is challenging for several reasons:

- The rapid release schedule upstream can overwhelm you with merge commits. If this is not managed well, most of your bandwidth can be taken up with keeping up with upstream changes.

- Adding new features is hard, because you have no control over how upstream will change the components you depend on.

- The size and complexity of the project make it difficult to debug issues, or to understand why something may not be working.

This post describes how we approached our new Firefox fork, and the tooling we built to enable a simple workflow for keeping up with upstream at minimal developer cost. The core tenets of this approach are:

- When possible, use the Firefox configuration and branding system for customizations. Firefox has many options for toggling features at build and runtime which we can leverage without touching source code.

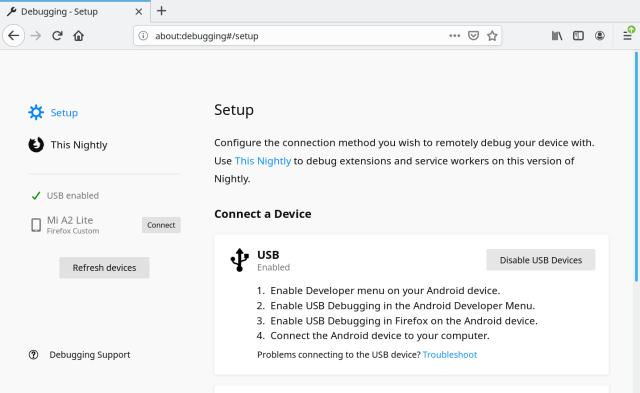

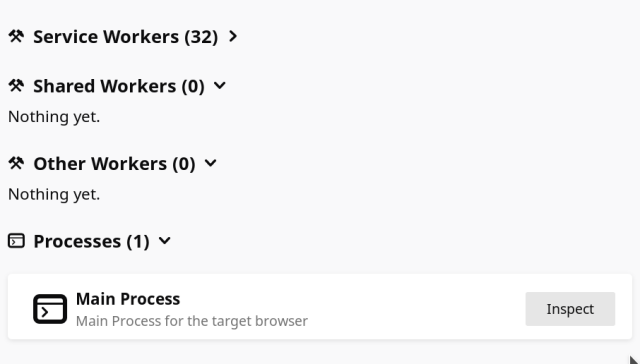

- Bundle extensions to provide custom browser features. We can, for example, ship adblocking and antitracking privacy features by simply bundling the Ghostery browser extension. For features that cannot be implemented using current webextension APIs, experimental APIs can be used to build features on top of internal Firefox APIs that are usually off-limits to extensions.

- For changes that cannot be made via configuration or extensions, patch Firefox code. Ideally, patches should be small and targeted to specific features, so rebasing them on top of newer versions of Firefox is as easy as possible.

fern.js workflow

Fern is the tool we built for our workflow. It automates the process of fetching a specific version of Firefox source, and applying the changes needed to it to build our fork. Given a Firefox version, it will:

- fetch the source dump for that version,

- copy branding and extensions into the source tree,

- apply some automated patches, and

- apply manual patches.

Fern uses a config file to specify which version of Firefox we are using, and which addons we should fetch and bundle with the browser.
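The order of these steps can be sketched as a small plan builder. This is a minimal illustration in Python, not fern's actual code: the `Workspace` fields, the step names, the version number and the addon URL are all hypothetical. Only the source URL pattern is taken from the layout of Mozilla's public release archive.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """Hypothetical stand-in for fern's config file."""
    firefox_version: str
    addons: dict = field(default_factory=dict)  # addon name -> download URL


def source_url(version: str) -> str:
    # Path layout of Mozilla's release archive for source tarballs.
    return (
        "https://archive.mozilla.org/pub/firefox/releases/"
        f"{version}/source/firefox-{version}.source.tar.xz"
    )


def build_plan(ws: Workspace) -> list:
    """Return the ordered steps that turn vanilla source into the fork."""
    plan = [("fetch-source", source_url(ws.firefox_version))]
    plan.append(("copy-branding", "browser/branding/ghostery"))
    for name, url in sorted(ws.addons.items()):
        plan.append(("bundle-extension", f"{name} <- {url}"))
    # Automated patches run before manual ones, as in the list above.
    plan.append(("apply-patches", "automated"))
    plan.append(("apply-patches", "manual"))
    return plan


if __name__ == "__main__":
    # Example values only; the addon URL is a placeholder.
    ws = Workspace("78.0.1", {"ghostery": "https://example.com/ghostery.zip"})
    for step, detail in build_plan(ws):
        print(step, detail)
```

Keeping the plan as plain data means the same config always produces the same sequence of steps, which is what makes moving to a new Firefox version a one-line change.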

Customising via build flags

Firefox builds are configured by a so-called mozconfig file. This is essentially a script that sets up environment variables and compiler options for the build process. Most of these options concern setting up the build toolchain, but there is also scope for customising some browser features which can be included or excluded via preprocessor statements.

For the Ghostery browser we specify a few mozconfig options, most importantly to change the name of the browser and its executable:

- ac_add_options --with-app-name=Ghostery changes the binary name generated by the build to Ghostery, instead of firefox.

- export MOZ_APP_PROFILE="Ghostery Browser" changes the application name seen by the OS.

- ac_add_options --disable-crashreporter disables the crash reporter application.

- ac_add_options MOZ_TELEMETRY_REPORTING= disables all browser telemetry.

Branding

Firefox includes a powerful branding system, which allows all icons to be changed via a single mozconfig option. This is how Mozilla ship their various variants, such as Developer Edition and Nightly, which have different colour schemes and logos.

We can switch the browser branding with ac_add_options --with-branding=browser/branding/ghostery in our mozconfig. The build will then look into the given directory for various brand assets, and we just have to mirror the correct path structure.

Handily, this also includes a .js file where we can override pref defaults. Prefs are a Firefox key-value store which can be used for runtime configuration of the browser, and allow us to further customise the browser.

For the Ghostery browser, we use this file to disable some built-in Firefox features, such as Pocket and Firefox VPN, and to tweak various privacy settings for maximum protection. This is also where we configure endpoints for certain features such as safe browsing and remote settings, so that the browser does not connect to Mozilla endpoints for these.

Bundling extensions

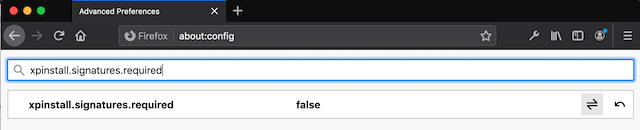

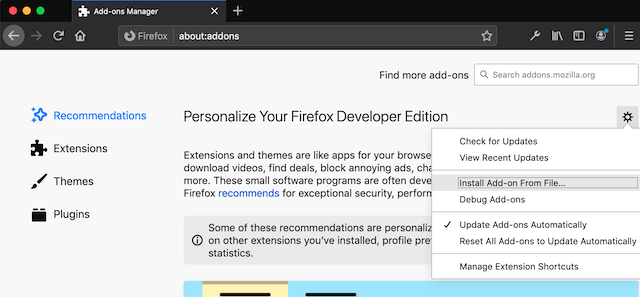

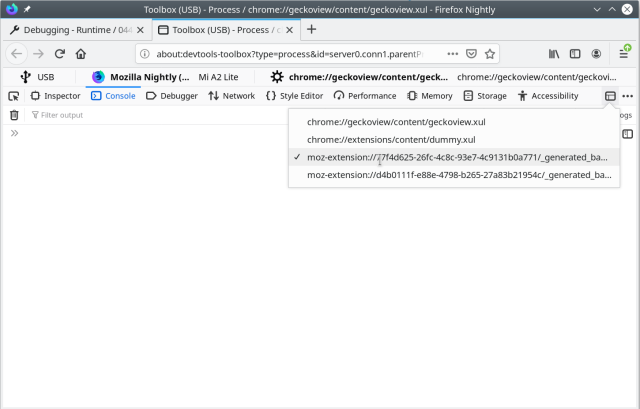

As we are using extensions for core browser features, we need to be able to bundle them such that they are installed on first start, and cannot be uninstalled. Additionally, to use experimental extension APIs they have to be loaded in a privileged state. Luckily Firefox already has a mechanism for this.

Firstly, we put the unpacked extension into the browser/extensions/ folder of the Firefox source. Secondly, we need to create a moz.build file in the extension’s root. This file must contain some Python boilerplate which declares all of the files in the extension. Finally, we patch browser/extensions/moz.build to add the name of the folder we just created to the DIRS list.

This process is mostly automated by fern. We simply specify extensions with a URL to download them inside the .workspace file, and fern downloads and extracts them, copies them to the correct location, and generates the moz.build boilerplate. The one remaining manual step is to add the extensions to the list of bundled extensions, which we can do with a small patch.
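To give an idea of what the generated boilerplate might look like, here is a minimal sketch in Python of a generator that walks an unpacked extension and emits moz.build text. It is not fern's implementation: the `FINAL_TARGET_FILES.features[...]` form is modelled on how extensions shipped in the Firefox tree declare their files, and the function name and addon id are hypothetical.

```python
import os
import tempfile


def render_moz_build(extension_dir: str, addon_id: str) -> str:
    """Render moz.build text declaring every file under extension_dir."""
    groups = {}
    for root, dirs, files in os.walk(extension_dir):
        dirs.sort()  # deterministic traversal order
        rel = os.path.relpath(root, extension_dir)
        parts = () if rel == "." else tuple(rel.split(os.sep))
        names = sorted(f for f in files if f != "moz.build")
        if names:
            groups[parts] = names

    lines = []
    for parts, names in sorted(groups.items()):
        # One declaration per directory, mirroring the extension's layout.
        target = f'FINAL_TARGET_FILES.features["{addon_id}"]'
        target += "".join(f'["{p}"]' for p in parts)
        lines.append(f"{target} += [")
        for name in names:
            lines.append(f'    "{"/".join(parts + (name,))}",')
        lines.append("]")
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    # Build a tiny fake extension to demonstrate the output.
    with tempfile.TemporaryDirectory() as ext:
        open(os.path.join(ext, "manifest.json"), "w").close()
        os.mkdir(os.path.join(ext, "background"))
        open(os.path.join(ext, "background", "index.js"), "w").close()
        print(render_moz_build(ext, "addon@example.com"))
```

Generating this file rather than writing it by hand matters because the file list changes with every extension release, and a missing entry means the file is silently left out of the build.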

Building

Once we have our browser source with our desired config changes, bundled extensions and some minor code changes, we need to build it. Building Firefox from source locally is relatively simple. The ./mach bootstrap command handles fetching of the toolchains you need to build the browser for your current platform. This is great for a local development setup, but for CI we have extra requirements:

- Cross-builds: Our build servers run Linux, so we'd like to be able to build Mac and Windows versions on those machines without emulation.

- Reproducible builds: We should be able to easily reproduce a build that the CI built, i.e. build the same source with the same toolchain.

Mozilla already do cross-builds, so we can learn from their CI setup, taskcluster, how to replicate their build environment. Firefox builds are dockerized on taskcluster, so we do the same, using the same Debian 10 base image and installing the same base dependencies. The platform-specific dockerfiles all build on this base dockerfile.

Mozilla's taskcluster CI system defines not only how to build Firefox from source, but also how to build the entire toolchain required for the final build. Builds are defined in yaml inside the Firefox source tree. For example, linux.yml contains the linux64/opt build, which describes an optimised 64-bit Linux build. To make our docker images for cross-platform browser builds, we use these configs to find the list of toolchains we need for the build. We can therefore go from a Firefox build config for a specific platform to a Dockerfile with the following steps:

- Extract the list of toolchain dependencies for the build from the taskcluster definitions.

- Find the artifact name for each toolchain (also in taskcluster definitions).

- Fetch the toolchain artifact from Mozilla's taskcluster services.

- Snapshot the artifact with IPFS so we have a perma-link to this exact version of the artifact.

- Add the commands to fetch and extract the artifact in the dockerfile.

This gives us a dockerfile that will always use the same combination of toolchains for the build. In our dockerfiles we also mimic the taskcluster path structure, allowing us to directly use mozconfigs from the Firefox source to configure the build to use these toolchains. The platform dockerfiles and mozconfigs are committed to the repo, so that the entire build setup is snapshotted in a commit and can be reproduced (provided the artifacts remain available on IPFS).
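The steps above can be sketched as follows. This is a simplified illustration in Python under several assumptions: the dictionaries stand in for the parsed taskcluster yaml (with a `fetches` / `toolchain` list on the build and a `toolchain-artifact` entry on each toolchain), the `pinned` mapping of toolchain name to IPFS content id is assumed to have been produced beforehand by the fetch and snapshot steps, and the content ids, the gateway URL and the extraction directory are placeholders.

```python
def toolchains_for(build_defs: dict, build_name: str) -> list:
    """Step 1: list the toolchains a build depends on."""
    return list(build_defs[build_name]["fetches"]["toolchain"])


def dockerfile_lines(build_defs, toolchain_defs, pinned, build_name,
                     gateway="https://ipfs.io/ipfs",
                     fetch_dir="/builds/worker/fetches"):
    """Emit Dockerfile commands that fetch and unpack each pinned toolchain."""
    lines = []
    for name in toolchains_for(build_defs, build_name):
        # Step 2: artifact name from the toolchain definition.
        artifact = toolchain_defs[name]["run"]["toolchain-artifact"]
        filename = artifact.rsplit("/", 1)[-1]
        # Steps 3-4 happened earlier; we only look up the pinned content id.
        cid = pinned[name]
        # Step 5: fetch and extract inside the image.
        lines.append(f"RUN wget -q -O /tmp/{filename} {gateway}/{cid} \\")
        lines.append(f" && tar -xf /tmp/{filename} -C {fetch_dir} \\")
        lines.append(f" && rm /tmp/{filename}")
    return lines


if __name__ == "__main__":
    # Illustrative data only; real definitions live in the Firefox source tree.
    build_defs = {
        "linux64/opt": {"fetches": {"toolchain": ["linux64-clang", "linux64-rust"]}},
    }
    toolchain_defs = {
        "linux64-clang": {"run": {"toolchain-artifact": "public/build/clang.tar.xz"}},
        "linux64-rust": {"run": {"toolchain-artifact": "public/build/rustc.tar.xz"}},
    }
    pinned = {"linux64-clang": "<cid-of-clang>", "linux64-rust": "<cid-of-rust>"}
    print("\n".join(dockerfile_lines(build_defs, toolchain_defs, pinned, "linux64/opt")))
```

Because the content id is derived from the artifact's bytes, a dockerfile generated this way always resolves to the same toolchain binaries, whatever Mozilla's CI later publishes under the same artifact name.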

Summary

This post has given a quick overview of how we set up the user-agent-desktop project for the new Ghostery browser as a lightweight Firefox fork.

The main benefit of this approach can be seen when merging new versions of Firefox from upstream. With the Cliqz hard fork we often ended up with merge diffs on the order of 1 million changes, and multiple conflicts to resolve. In contrast, under the user-agent-desktop workflow, we just point to the updated Mozilla source and make some minor changes to the manual patches so that they still apply properly, which creates less than 100 lines of diff. What used to be a multi-week task of merging a new Firefox major version now takes a single developer less than half a day.